Planet SuperRes: A Technical Overview

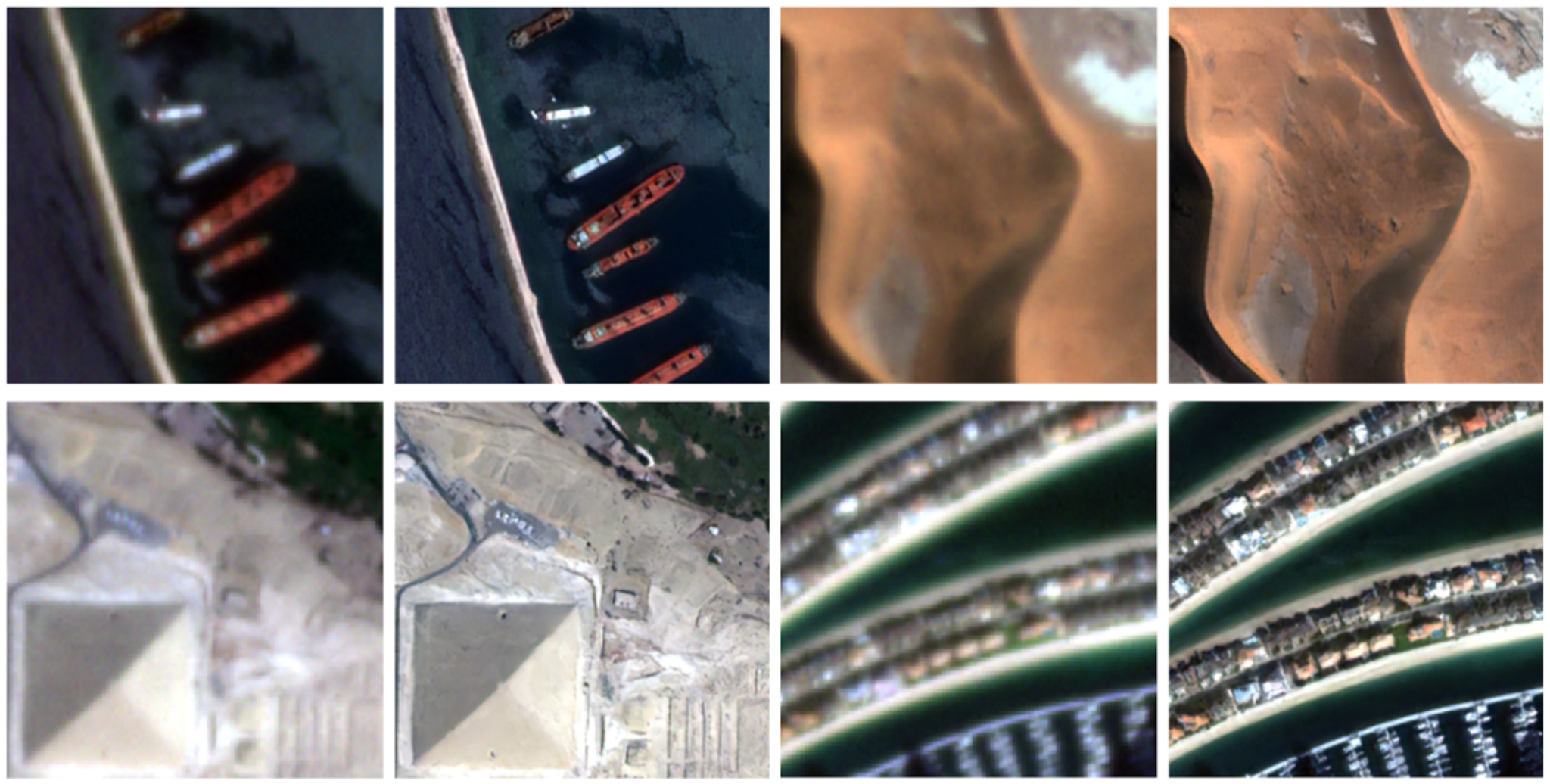

Four pairs of PlanetScope images, with 3 m resolution on the left and 1.5x SuperRes 2 m on the right.

TechArticle contributors: Justin Davis, Vinicius Perin, Ramesh Nair, and Kirk Bloomquist.

Planet SuperRes is our AI-powered image enhancement product that upscales 3 m PlanetScope® imagery to a 2 m resolution prediction. Super-resolution methods allow us to enhance image clarity, suppress noise, and achieve finer spatial detail. Enhanced image quality can be helpful for various imagery analysis tasks, including agricultural monitoring, forestry, and defense and intelligence applications. In an earlier blog post, we explored what makes the SuperRes model special and how it can be used.

In this article, we assess the effectiveness of an Enhanced Super-Resolution Generative Adversarial Network (ESRGAN) model (Wang et al., 2018) for a 1.5x scaling factor. Our evaluation leverages both quantitative metrics and qualitative analysis to provide a comprehensive understanding of the model's strengths and limitations. While the results demonstrate the potential for generating visually realistic and high-quality outputs, challenges remain in reconstructing fine details and small features, which are critical for certain applications.

The Planet120K Dataset

The performance of any supervised learning model is fundamentally dependent on the quality and scale of its training data. The primary differentiator of Planet SuperRes is the Planet120K dataset, a massive, proprietary collection of co-registered, co-collected satellite image pairs.

Dataset Composition. The dataset consists of over 120,000 image pairs, each comprising:

- A medium-resolution image: A 3 m PlanetScope scene.

- A high-resolution ground truth image: A 0.5 m SkySat® scene of the same location.

For both data sources, we used surface reflectance products, with PlanetScope offering 8 spectral bands and SkySat providing 4. For the production version of the model, we used our 4-band PlanetScope surface reflectance product (Blue, Green, Red, NIR) as the input and observed no decrease in performance.

Curation Process. To ensure high fidelity, each pair was curated with strict quality controls.

- Temporal Consistency: Each PlanetScope/SkySat pair was captured within a 12-hour window to minimize temporal changes on the ground.

- On-Nadir: All SkySat images have a maximum off-nadir angle of 4 degrees, so minimal geometric distortion was introduced relative to our near-nadir PlanetScope images.

- Geometric Alignment: We employed phase correlation for sub-pixel co-registration, ensuring precise spatial alignment between low-resolution and high-resolution images. Pairs with a phase correlation error exceeding 0.2 were discarded.

- Radiometric Normalization: Both SkySat and PlanetScope have surface reflectance atmospheric correction applied. After correction, an additional per-pixel approach was used to normalize the SkySat imagery to match the PlanetScope spectral response, minimizing radiometric shifts that could degrade the radiometric accuracy of the output.

- Clarity: A minimum clear-sky requirement of 95% was enforced for both images, effectively eliminating artifacts from cloud cover.
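The geometric alignment step relies on phase correlation. The following is a minimal NumPy sketch of the basic technique, not the production co-registration code. It recovers only whole-pixel shifts. Sub-pixel alignment as described above would need an extra refinement step (for example, upsampling around the correlation peak), which is omitted here. The function name and the returned peak value are illustrative assumptions, and the peak value is not the "phase correlation error" used for filtering.

```python
import numpy as np


def phase_correlation_shift(reference, moving):
    """Estimate the integer (row, col) shift that maps `reference` onto `moving`.

    Both inputs are 2-D arrays of the same shape. The shift is recovered under
    the assumption of a circular (wrap-around) translation.
    """
    ref_fft = np.fft.fft2(reference)
    mov_fft = np.fft.fft2(moving)

    # Normalized cross-power spectrum: keep only the phase difference.
    cross_power = mov_fft * np.conj(ref_fft)
    cross_power /= np.maximum(np.abs(cross_power), 1e-12)

    # The inverse transform peaks at the translation between the two images.
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)

    # Peaks past the midpoint correspond to negative shifts.
    shift = tuple(
        int(p) if p <= size // 2 else int(p) - size
        for p, size in zip(peak, correlation.shape)
    )
    return shift, float(correlation.max())


rng = np.random.default_rng(0)
image = rng.random((64, 64))
shifted = np.roll(image, shift=(3, -5), axis=(0, 1))
print(phase_correlation_shift(image, shifted)[0])  # (3, -5)
```

A sharp, high correlation peak suggests the two images differ mainly by a translation. A weak or smeared peak suggests the pair is harder to align.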

This rigorous curation process makes the Planet120K dataset a robust foundation for training high-fidelity super-resolution models.
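The curation thresholds listed above can be read as a simple pass/fail filter. The sketch below is one possible way to express them. The `CandidatePair` record and its field names are hypothetical, and it assumes the "12-hour window" means the two acquisition times differ by at most 12 hours.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class CandidatePair:
    """Hypothetical metadata record for one PlanetScope/SkySat pair."""
    planetscope_time: datetime
    skysat_time: datetime
    skysat_off_nadir_deg: float
    phase_correlation_error: float
    planetscope_clear_fraction: float  # 0.0 to 1.0
    skysat_clear_fraction: float       # 0.0 to 1.0


# Thresholds taken from the curation criteria described in the text.
MAX_TIME_GAP = timedelta(hours=12)
MAX_OFF_NADIR_DEG = 4.0
MAX_PHASE_CORRELATION_ERROR = 0.2
MIN_CLEAR_FRACTION = 0.95


def passes_curation(pair):
    """Return True if the pair satisfies every curation threshold."""
    time_gap = abs(pair.planetscope_time - pair.skysat_time)
    clearest_common = min(pair.planetscope_clear_fraction, pair.skysat_clear_fraction)
    return (
        time_gap <= MAX_TIME_GAP
        and pair.skysat_off_nadir_deg <= MAX_OFF_NADIR_DEG
        and pair.phase_correlation_error <= MAX_PHASE_CORRELATION_ERROR
        and clearest_common >= MIN_CLEAR_FRACTION
    )


acquired = datetime(2024, 6, 1, 10, 30)
pair = CandidatePair(acquired, acquired + timedelta(hours=2), 3.1, 0.08, 0.99, 0.97)
print(passes_curation(pair))  # True
```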

Methodology and Model Architecture

We employ the Enhanced Super-Resolution Generative Adversarial Network (ESRGAN) (Wang et al., 2018) to perform super-resolution. ESRGAN, introduced in 2018, remains a highly regarded generative AI approach for single-image super-resolution due to its ability to produce visually realistic and high-quality results. The key characteristics of ESRGAN include:

- Residual-in-Residual Dense Blocks (RRDBs): These blocks replace traditional residual blocks, providing better feature representation and improved learning stability by incorporating dense connections and residual scaling.

- Perceptual Loss Function: ESRGAN combines pixel-wise losses (e.g., Mean Squared Error) with perceptual loss derived from feature maps of a pre-trained network, prioritizing visual realism over exact pixel accuracy.

- Generative Adversarial Training: The network employs a discriminator to enforce realistic textures and fine details, enabling sharper outputs compared to traditional methods.

- High-Fidelity Detail Restoration: ESRGAN emphasizes restoring fine details, making it particularly effective for applications requiring sharp and artifact-free images.

The Confidence Layer

A key innovation in Planet SuperRes is the Confidence Layer, an auxiliary output channel that provides a per-pixel estimate of the model's certainty. This layer predicts the expected Mean Absolute Error (MAE) between the generated super-resolved image and a hypothetical ground truth. We modified the ESRGAN architecture so that the expected MAE is learned during training on our expansive training dataset. At inference time, the expected MAE is predicted using only the low-resolution input image.

The network learns the expected MAE through a secondary output head added to the generator. This head is trained concurrently with the primary super-resolution task, using an L1 loss function to minimize the difference between the predicted MAE and the actual MAE observed during training.
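The training target for the secondary head can be sketched in a few lines of NumPy. This is an illustration of the idea rather than the actual training code. It assumes the per-pixel "actual MAE" is the absolute error averaged across spectral bands, which the text does not specify, and the function names are hypothetical.

```python
import numpy as np


def actual_mae_map(super_resolved, ground_truth):
    """Per-pixel absolute error averaged over bands.

    Inputs are arrays shaped (bands, height, width). Averaging across bands
    is an assumption made for this sketch.
    """
    return np.abs(super_resolved - ground_truth).mean(axis=0)


def confidence_head_l1_loss(predicted_mae, super_resolved, ground_truth):
    """L1 loss between the head's predicted MAE map and the observed MAE map."""
    target = actual_mae_map(super_resolved, ground_truth)
    return float(np.abs(predicted_mae - target).mean())


# Toy example: every pixel is off by 100 reflectance units in every band,
# and the head predicts an error of 90 everywhere.
sr = np.zeros((4, 2, 2))
hr = np.full((4, 2, 2), 100.0)
predicted = np.full((2, 2), 90.0)
print(confidence_head_l1_loss(predicted, sr, hr))  # 10.0
```

Because the target is computed from the ground truth only during training, the head has to learn to anticipate error from the input alone, which is what allows it to run at inference time without a reference image.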

The raw expected MAE output is then inverted and rescaled to a more intuitive 0-100 confidence score using the following formula, where a higher value indicates higher confidence (lower predicted error):

Confidence = 100 * (1 - min(MAE / 2500, 1))
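As a quick check of the rescaling, here is a small Python sketch that reads the formula as 100 × (1 − min(MAE / 2500, 1)), the only grouping that yields the stated 0-100 range. The function and parameter names are illustrative. The value 2500 comes from the formula, and its units are assumed to match those of the MAE output.

```python
def confidence_from_mae(expected_mae, mae_ceiling=2500.0):
    """Map an expected MAE to a 0-100 confidence score.

    Errors at or above `mae_ceiling` saturate at a confidence of 0.
    """
    return 100.0 * (1.0 - min(expected_mae / mae_ceiling, 1.0))


print(confidence_from_mae(0.0))     # 100.0
print(confidence_from_mae(1250.0))  # 50.0
print(confidence_from_mae(2500.0))  # 0.0
print(confidence_from_mae(4000.0))  # 0.0
```

The `min` clamp means that any predicted error above the ceiling is reported as zero confidence rather than a negative score.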

The final confidence layer is valuable for assessing the degree of distortions and hallucinations generated by the super-resolution process. However, the confidence layer also reflects other sources of error, including:

- Radiometric Error: Differences in radiometry between the PlanetScope and SkySat image pairs used in training can appear in the confidence layer.

- Georeferencing Error: Errors in georeferencing, particularly magnified over small, bright targets when comparing SkySat and PlanetScope imagery, can contribute to the confidence layer's values.

- Other Errors: A variety of other factors can introduce error, including varying space conditions, sensor variability, inconsistencies in PlanetScope spatial resolution, and artifacts from resampling PlanetScope data to 3 m.

- Bright Targets: We observe lower confidence scores for small bright targets due to a combination of georeferencing offsets and larger radiometric differences, both of which occur more frequently over such targets in our SkySat and PlanetScope training image pairs.

Training Details

The model was trained for an initial 100 epochs using an Adam optimizer with an initial learning rate of 0.02 for both the generator and discriminator. As in the original ESRGAN paper, we initially used 23 RRDBs, each with 64 filters, to form the backbone of our network. The model was initially trained using 8-band PlanetScope inputs and predicted 4-band SkySat outputs. Training began with a warm-up phase using pixel-wise Mean Squared Error (MSE) loss before activating generative training for the remaining epochs. Training was conducted on four NVIDIA T4 GPUs over approximately 10 days.

After initial training, we optimized the model for production by reducing input channels from 8 to 4 bands (Blue, Green, Red, NIR), which maintained performance while improving inference speed. The model was then fine-tuned starting from the best checkpoint of the initial training phase.

We enhanced the model by adding the expected Mean Absolute Error (MAE) prediction layer as an uncertainty estimate. The weights were initialized using the NIR prediction channel to accelerate convergence, and the model was retrained to incorporate this additional capability.

Finally, we adopted an iterative pruning methodology to significantly reduce the network's computational footprint while preserving performance. The final model was quantized to half-precision (float16) for additional speed improvements, enabling deployment on resource-constrained hardware.

Performance Evaluation

Quantitative Metrics

The 1.5x model (3 m → 2 m) was evaluated on a held-out test set of 10,000 images from the Planet120K dataset, using four metrics:

- Learned Perceptual Image Patch Similarity (LPIPS): This is our primary metric for visual quality. Unlike PSNR or SSIM, LPIPS compares images based on feature maps from a pre-trained deep neural network (AlexNet), making it align much better with human perception of similarity. Note that we report 1 - LPIPS so that a higher score indicates better perceptual quality.

- Peak Signal-to-Noise Ratio (PSNR): This metric quantifies pixel-level fidelity by comparing the maximum possible pixel value to the mean squared error. While useful for gauging pixel accuracy, it can be misleading, as high PSNR does not always correlate with high visual quality.

- Structural Similarity Index Measure (SSIM): This metric evaluates image similarity based on luminance, contrast, and structure. It provides a more balanced view than PSNR but still falls short of LPIPS for capturing perceptual nuance.

- Confidence Accuracy: This metric provides a direct comparison between the ground truth MAE and our predicted MAE.

| Metric | Score |
|---|---|
| 1 - Learned Perceptual Image Patch Similarity (LPIPS) | 0.961 |
| Peak Signal-to-Noise Ratio (PSNR) | 33.53 |
| Structural Similarity Index Measure (SSIM) | 0.876 |
| Confidence Accuracy | 0.993 |
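For readers less familiar with PSNR, the standard definition is 10 · log10(MAX² / MSE), expressed in decibels. The sketch below implements that textbook formula in NumPy. It is not the evaluation code behind the table, and the choice of `max_value` (the largest possible pixel value for the data type or reflectance scaling in use) is left to the caller as an assumption.

```python
import numpy as np


def psnr(reference, estimate, max_value):
    """Peak Signal-to-Noise Ratio in decibels between two same-shaped arrays."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Toy example: every pixel is off by 1 on a 0-10 scale, so MSE = 1.
reference = np.zeros((8, 8))
estimate = np.ones((8, 8))
print(psnr(reference, estimate, max_value=10.0))  # 20.0
```

Because PSNR depends only on the mean squared error, two outputs with the same score can look very different, which is why the perceptual metric is treated as primary above.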

Qualitative Analysis: Visual Examples

We selected a few examples and highlighted some of the strengths and weaknesses of our super-resolution output below. Overall we observed strong visual performance for our super-resolution model. There are some limitations due to hallucinations and an inability to reconstruct small objects/features or dense urban areas.

High-Frequency Detail (Chase Center): The model demonstrated a strong ability to reconstruct high-frequency details. In the Chase Center example, previously indistinct text on the building's roof became legible, showcasing the model's effectiveness in creating sharp, realistic features from coarse inputs.

PlanetScope images of the Chase Center. 3 m input (left) and 1.5x SuperRes 2 m (right).

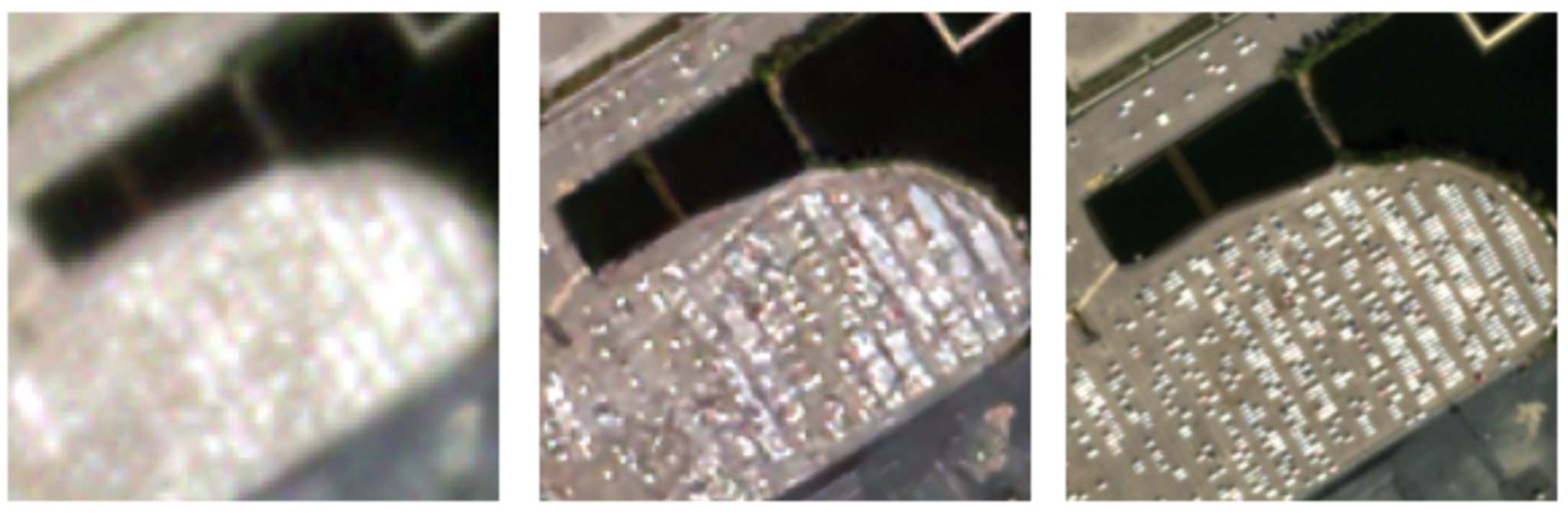

Generative Hallucination (Parking Lot): In scenarios where the input resolution was insufficient to resolve small objects, such as cars in a dense parking lot, the model relied on its generative capabilities. It produced a texture that is plausibly a parking lot but "hallucinates" the individual cars, which may have incorrect shapes or orientations. The Confidence Layer correctly flagged these areas with low confidence, providing critical context to the user.

Planet images of a parking lot. PlanetScope 3 m input (left), PlanetScope with 1.5x SuperRes 2 m (center), and SkySat 0.5 m ground truth (right).

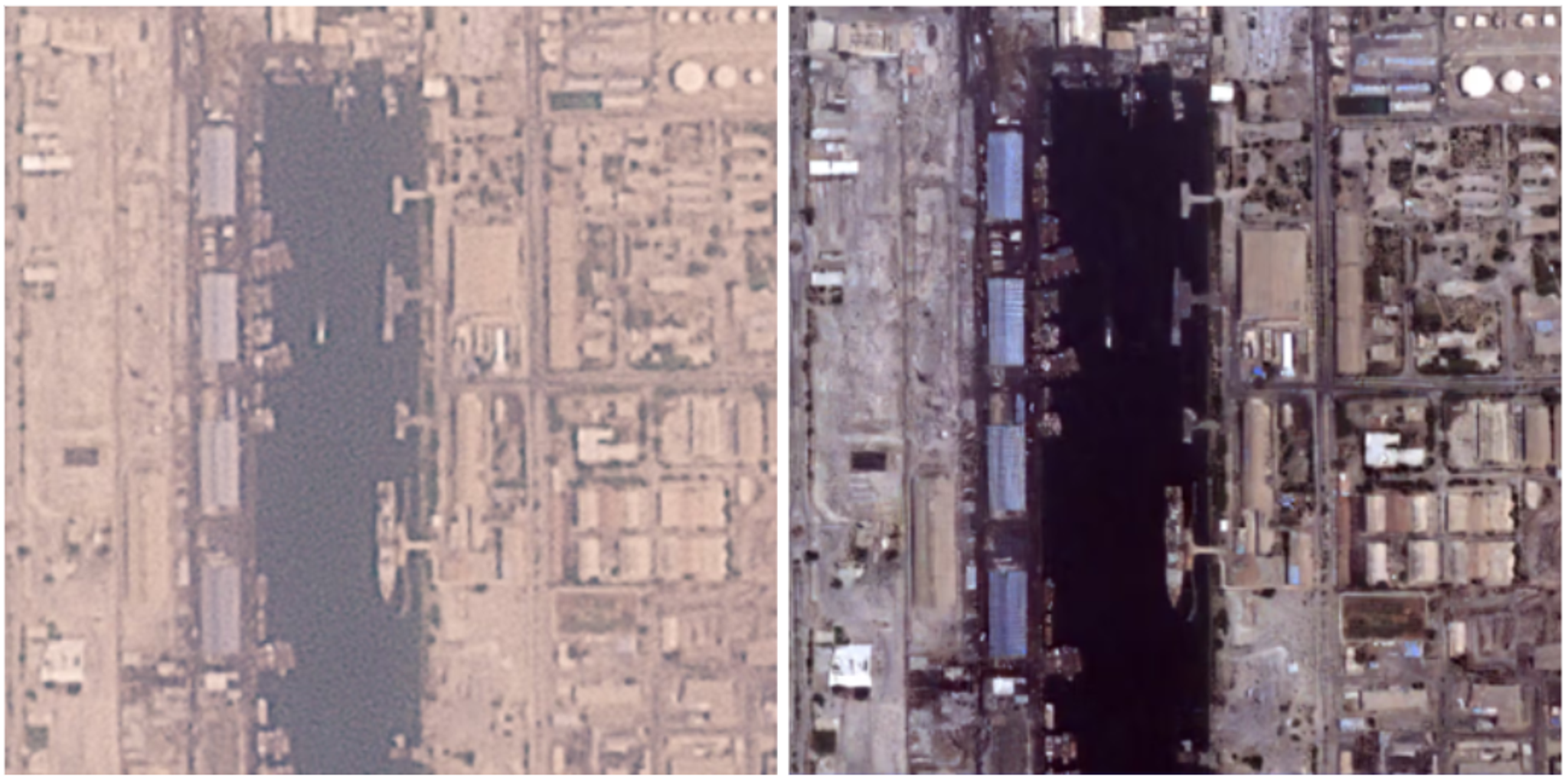

Complex Geometry (Shipping Port): Shipping ports present a challenge with their intricate and repeating geometric patterns (cranes, shipping containers) and varied surfaces. The model performed well in sharpening the large, well-defined edges of ships and container stacks. However, finer details like individual cranes or rigging may show some distortion, highlighting the boundaries of the model's ability to reconstruct highly complex, man-made structures.

PlanetScope images of a shipping port. 3 m visual product (left) and 1.5x SuperRes result (right).

Confidence Layer

In the visualization, confidence was represented using a Red to Blue color scale. We gradually increased the color scale transparency at high levels of confidence so you can still see the predicted super-resolution image at these locations. Dark red indicates low confidence, and a transparent pixel indicates high confidence.
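An overlay of this kind could be built roughly as follows. This is a hedged sketch, not the rendering code used for the figures. The endpoint colors, the linear color ramp, and the `fade_start` threshold at which transparency begins to increase are all hypothetical choices.

```python
import numpy as np

# Hypothetical endpoint colors for the red-to-blue scale (RGB in 0-1).
LOW_CONFIDENCE_RGB = np.array([0.55, 0.0, 0.0])  # dark red
HIGH_CONFIDENCE_RGB = np.array([0.0, 0.0, 1.0])  # blue


def confidence_overlay_rgba(confidence, fade_start=50.0):
    """Convert a 0-100 confidence map of shape (H, W) into an RGBA overlay (H, W, 4).

    Pixels at or below `fade_start` are fully opaque. Opacity then falls
    linearly to zero at a confidence of 100, so the super-resolved image
    shows through where the model is confident.
    """
    conf = np.clip(np.asarray(confidence, dtype=np.float64), 0.0, 100.0)
    t = (conf / 100.0)[..., np.newaxis]
    rgb = (1.0 - t) * LOW_CONFIDENCE_RGB + t * HIGH_CONFIDENCE_RGB
    alpha = np.clip((100.0 - conf) / (100.0 - fade_start), 0.0, 1.0)
    return np.concatenate([rgb, alpha[..., np.newaxis]], axis=-1)


overlay = confidence_overlay_rgba(np.array([[0.0, 75.0, 100.0]]))
print(overlay.shape)  # (1, 3, 4)
```

The resulting array can be drawn on top of the super-resolved image with any tool that supports per-pixel alpha.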

1.5x SuperRes 2 m (left) and 1.5x SuperRes 2 m with confidence layer (right).

Basemaps

Super-resolution basemaps are currently under active development, leveraging the expertise Planet has gained from producing our Global Visual Basemaps over the past decade. We apply key learnings from that product to generate these new super-resolved mosaics. The process begins by selecting the best scenes published over a given area and time period using a "best-on-top" ranking algorithm. The SuperRes model is then applied to enhance the selected scenes. Finally, the outputs are mosaicked and color-balanced to create a clean, mostly cloud-free high-resolution result. These basemaps feature a maximum zoom level of 17, corresponding to a 1.2 m pixel size.
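The relationship between zoom level 17 and a roughly 1.2 m pixel can be checked with a short calculation. The sketch below assumes the common Web Mercator tiling scheme with 256-pixel tiles, which the text does not state explicitly, and the function name is illustrative.

```python
import math

EARTH_EQUATORIAL_RADIUS_M = 6378137.0  # WGS 84 semi-major axis
TILE_SIZE_PX = 256                     # assumed tile width in pixels


def web_mercator_pixel_size_m(zoom, latitude_deg=0.0):
    """Ground size of one pixel, in meters, at a given zoom level and latitude."""
    equator_circumference = 2.0 * math.pi * EARTH_EQUATORIAL_RADIUS_M
    pixels_around_equator = TILE_SIZE_PX * 2 ** zoom
    return equator_circumference * math.cos(math.radians(latitude_deg)) / pixels_around_equator


print(round(web_mercator_pixel_size_m(17), 3))  # 1.194
```

Under these assumptions the 1.2 m figure is the pixel size at the equator. In Web Mercator the ground distance covered by a pixel shrinks with the cosine of the latitude.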

Conclusion

Planet SuperRes successfully demonstrates the power of a fine-tuned ESRGAN model when paired with a massive, high-quality, proprietary dataset like Planet120K. Our quantitative metrics, particularly the high 1 - LPIPS score, confirm that the model produces perceptually realistic imagery that significantly enhances spatial detail, upscaling 3 m PlanetScope data to a sharper 2 m resolution.

The introduction of the Confidence Layer is a key innovation, providing users with a critical per-pixel assessment of the model's output. This layer effectively flags areas where the model may be "hallucinating" or struggling to reconstruct fine details, adding a vital layer of transparency and reliability for analytical applications. While limitations persist in resolving dense, complex features, the model excels at enhancing structural details and overall image clarity, unlocking new potential for visual analysis across various industries.

As we continue to refine the model and expand applications like our super-resolution basemaps, Planet SuperRes stands as a robust solution for delivering enhanced satellite imagery at scale, bridging the gap between resolution and accessibility.

Interested in learning more? Reach out to us at super-res@planet.com — we'd love to hear from you!
