Top Takeaways from the Satellite Interoperability Workshop

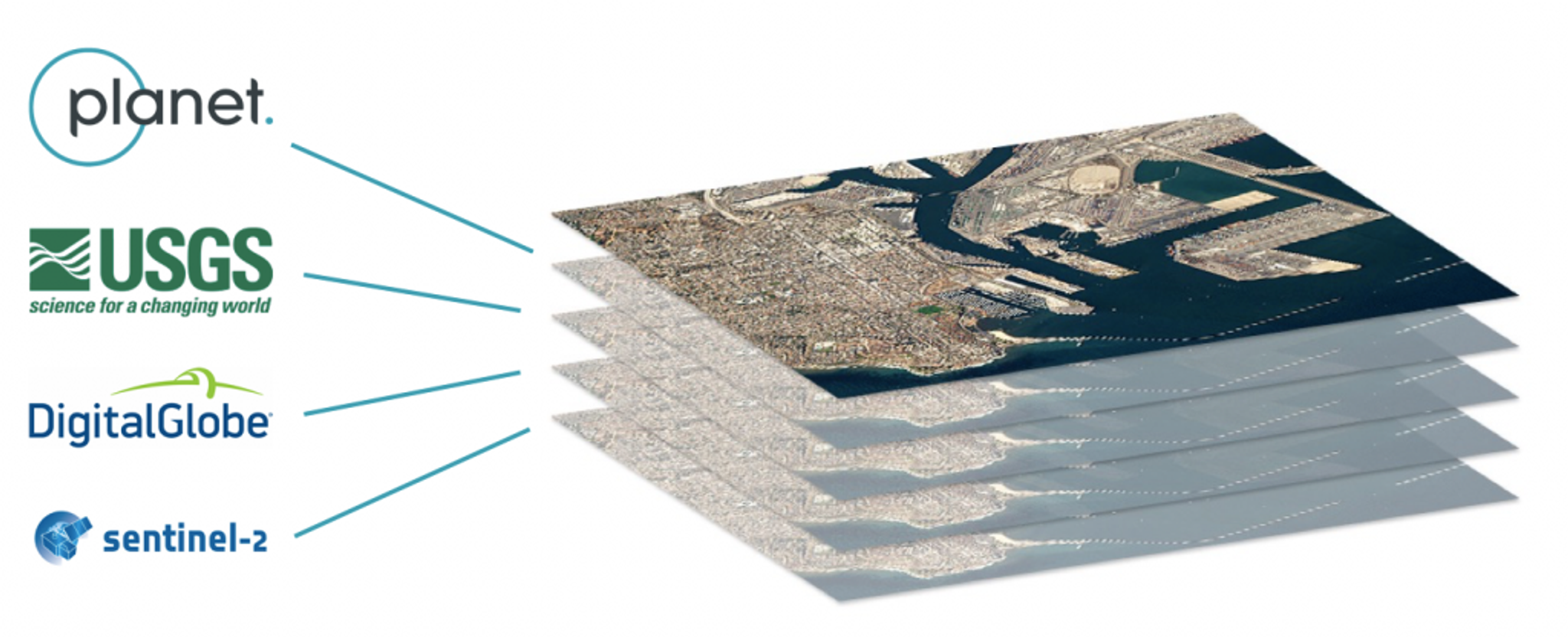

About a month ago, more than 100 leaders from the geospatial industry, government, and NGOs gathered at the USGS Menlo Park campus to discuss one of the biggest questions facing the Earth observation (EO) field: is data from different satellites “interoperable,” or exchangeable, with one another? How much effort are we spending trying to make it appear interoperable? How much of this work constitutes getting data ready for analysis? Is analysis-ready data (ARD) the same thing as interoperable data?

Why does interoperability matter? For one, despite the incredible power of satellite imagery, it remains a relatively niche data source because turning raw pixels from different sources into actionable insight is difficult. Even the most seasoned users of satellite data are forced to search for data across different locations and massage the imagery before they can perform analysis, and there is no standardized definition of ARD to which practitioners adhere. This lack of standardization becomes even more urgent as vast archives of EO data flow into advanced machine-learning pipelines for data mining: substantial compute resources are spent harmonizing the data, yet the resulting models and classifiers are not portable.

Convened by Radiant.Earth and sponsored by a host of companies, including Planet, the workshop spanned three days. Attendees traveled from around the world to discuss and debate different definitions of, and approaches to, ARD and interoperability. Here are our top takeaways:

- Interoperability is a Measurement of Quality. Practitioners typically judge whether data is good by comparing it to another source of known quality, such as Landsat or Sentinel data. That comparability is core to what makes data usable, or ready for analysis.

- ARD Isn’t the Same as Interoperability. Although the terms are often used interchangeably, the processes that make data ready for analysis are not necessarily the ones that make it interoperable. Sentinel and USGS ARD products, for example, are not directly interoperable until they are harmonized to one standard or the other.

- ARD and Interoperability Require Standardization. In practice, making data ready for analysis (a term that has yet to be precisely defined) hinges on defining standards that specify how the resulting products should behave.

- Standardized ARD Will be a Cornerstone of Commercial Data. Commercial providers must invest in creating data harmonized to these standards and open that work up to others. At Planet, providing data in a form that integrates seamlessly with public data is a cornerstone of our product roadmap for 2018-2019.

- Harmonization of Industry Roadmaps and SLAs Will be Needed. Aligning the readiness of different processing levels, the concepts of operations (conops) of new missions, launch manifests, and the implementation of standards will happen over time, but more complex products require that this alignment be coordinated. You cannot combine products that are not available in the same year, or even at the same time of day after acquisition.

- Tapping into the Groundswell. While seemingly esoteric, ARD and interoperability are hot topics in the industry; the workshop showed that many independent efforts are underway to make commercial data like Planet’s interoperable with public sources. These efforts should continue to be consolidated in the open.

Exciting times are ahead: attendees left the workshop ready to take action on many fronts. Some plan to embrace STAC as a standard in commercial platforms, alongside OpenDataCube and other existing standards. Working groups are planned to start harmonization work in an open-source context; government participants are taking their enthusiasm back to their agencies; and groups like the Open Geospatial Consortium are folding the workshop outcomes into future publications. There was also renewed enthusiasm for supporting USGS and ESA efforts to align industry-wide standards around upcoming Sentinel and Landsat products, such as Level 2 ARD and the Landsat Collection 2 release. The full conference agenda is available online, and you can dive deep into the presentations on our YouTube Channel.
